What are Risk Signals?

What are Risk Signals?

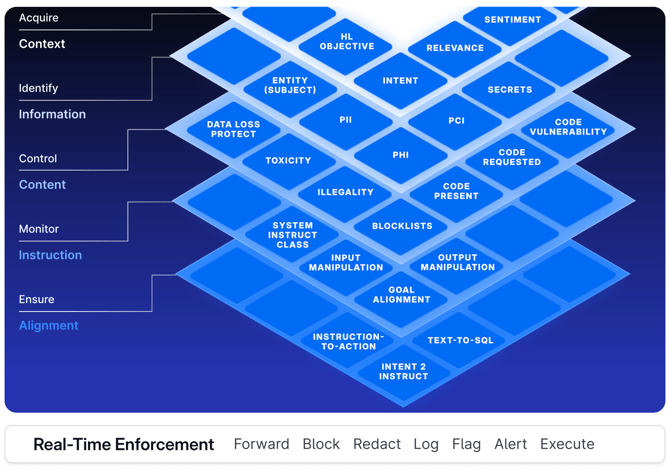

AIceberg's Layered Risk Monitoring Framework

AIceberg employs a comprehensive, multi-layered approach to AI risk monitoring that operates through five distinct analytical layers, each serving a specific purpose in ensuring safe, secure, and compliant AI interactions.

The Five-Layer Architecture

CONTEXT Layer

The foundational layer that ensures AI interactions align with the intended use case's context, user intentions, and objectives. This layer analyzes system instructions and clarifies the overarching goals the AI should achieve, while emphasizing intent understanding and semantic relevance to ensure content relevance to the interaction context.

INFORMATION Layer

Focuses on regulatory compliance and data security through named entity extraction. This layer identifies and protects sensitive information including Personally Identifiable Information (PII), Protected Health Information (PHI), and Payment Card Information (PCI). It contextualizes user interactions for better response alignment while preventing unauthorized disclosure of sensitive data.

CONTENT Layer

Monitors and controls content within both prompts and responses to ensure adherence to ethical and legal standards. This includes toxicity detection, illegality prevention, blocklist enforcement, and code safety verification. The layer manages executable code presence and ensures only appropriate, safe content is processed or generated.

INSTRUCTION Layer

The most critical layer for safety and security, identifying and classifying instructions provided to generative models. It detects malicious intent such as jailbreaking, prompt injection, and attempts to manipulate AI behavior beyond intended scope. This layer is essential for cybersecurity and system integrity.

ALIGNMENT Layer

Ensures harmony between user instructions and AI actions, particularly crucial for agentic AI workflows. This layer focuses on "Instruction-to-Action" alignment, preventing deviations that could lead to unintended consequences and ensuring the AI operates within design specifications and user expectations.

Comprehensive Risk Signal Coverage

The framework monitors dozens of specific risk signals across categories including:

User Analysis: Sentiment, intent, relevance, named entities

Safety Signals: PII/PHI/PCI detection, toxicity, illegality, secrets, blocklists

Security Signals: Prompt injection, jailbreaking, code vulnerabilities

Compliance: Data loss prevention, regulatory adherence

Operational: Goal alignment, output manipulation detection

This layered approach ensures that AIceberg can detect and mitigate risks at multiple levels simultaneously, providing comprehensive protection while maintaining the speed and user experience essential for production AI systems.

Last updated